Building My Own Chatbot with Dify: July's Top Repo on GitHub

I take a look at Dify, one of the top repos on GitHub’s explore feed. Dify is an LLM development platform where you can build RAG chatbots.

I think of GitHub's explore feed as a litmus test for whether a piece of tech has reached critical mass in popularity. If you're out of the loop, like I often feel I am, it's a good place to see where the software development hype cycle currently stands. With the sheer number of devs on the site, interesting repos bubble to the surface as people star them for later use. Let's see what's trending at the moment.

Top repo: Dify, an LLM development platform

Today is July 26th, and the top trending repo, unsurprisingly, has to do with AI. It's Dify, an LLM (Large Language Model) chatbot development platform. Since I've been digging into vector search recently (see my post Vector Databases - Basics of vector databases & search), Dify's RAG pipeline caught my attention. If you're unfamiliar, RAG is one of the latest buzzwords I've been seeing around the internet. It stands for Retrieval Augmented Generation: relevant text is retrieved from a vector database and fed to the chatbot alongside the question, so the database acts as the bot's long-term memory and as a reference of facts.
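To make the "augmented" part a bit more concrete, here's a minimal Python sketch of what happens after retrieval: the retrieved text is pasted into the prompt next to the user's question before it is sent to the LLM. The function name and prompt wording are hypothetical, not Dify's actual template, and the retrieval step itself is assumed to have already happened.

```python
def build_rag_prompt(question: str, retrieved_chunks: list[str]) -> str:
    """Assemble an augmented prompt: retrieved facts first, then the question.

    Hypothetical helper for illustration; Dify builds its own prompt internally.
    """
    # Bullet each retrieved chunk so the LLM can tell separate facts apart
    context = "\n".join(f"- {chunk}" for chunk in retrieved_chunks)
    return (
        "Answer using only the facts below. "
        "If they don't cover the question, say you don't know.\n\n"
        f"Facts:\n{context}\n\n"
        f"Question: {question}"
    )


prompt = build_rag_prompt(
    "How many moons does Jupiter have?",
    ["Jupiter has 95 known moons."],
)
print(prompt)
```

The LLM never "looks up" anything itself; it only sees whatever text ends up in this prompt, which is why the quality of retrieval matters so much later on.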

Practical applications

I use LLMs fairly heavily, mainly as partners for writing ideation, learning, or just mundane dev tasks. Commercial applications for us little guys, though, seem harder to pin down. My bread and butter is B2B applications and eCommerce, both of which are information-heavy.

You could hook a chatbot up to your site, but each customer question is a new challenge. If the bot misunderstands the customer's problem, hallucinates a fact about a product, or simply suggests the competition's product, it's more trouble than it's worth.

But how about custom training your own model and at the same time providing it with factual information about your products and services? That’s a different proposition entirely.

Trying out Dify

Dify sounds like a solution to the problem of chatbots not having access to the right facts. It also has a fairly feature-rich editor that lets us build custom flows for our chatbots. We can query a local database or pull in data from external sources, such as an API. Let's take it for a spin.

Thankfully, the repo quickly points us to a self-hosting guide (https://docs.dify.ai/getting-started/install-self-hosted), and self-hosting happens to be another hobby of mine.

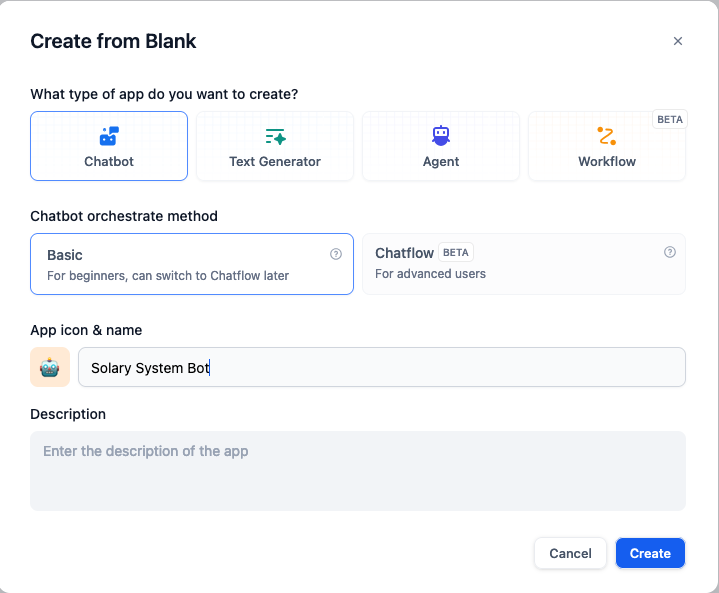

Once the setup is done and I have Dify running on my laptop or my home server, I'm greeted with an interface to create, train and test my own chatbots. Dify lets me create various generative AI tools, but we'll focus on chatbots for now. I want to set up a simple bot to tell me about the solar system, so I'll go through the creation flow with the default values:

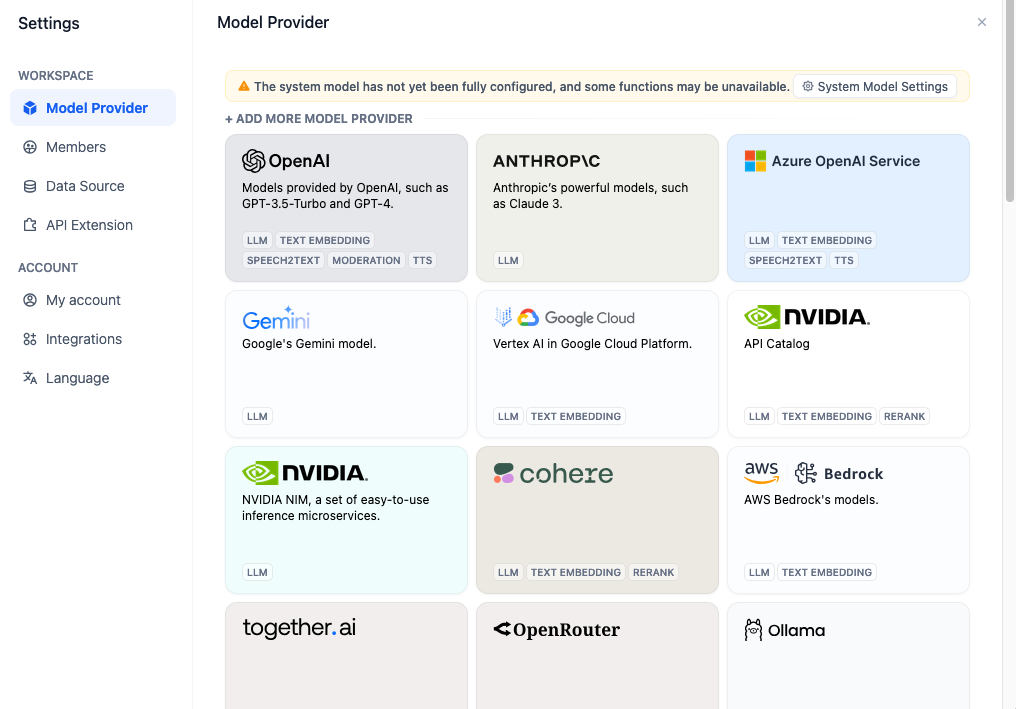

This creates the bot and shows me the interface to start customising it. First, though, I need to connect an LLM provider. This is the engine that runs our chatbot, generating responses based on our guidelines. OpenAI is no longer the only player here; we have an abundant choice of providers to base our chatbots on. These are essentially the APIs offered by OpenAI, Anthropic, Google, and many others.

Initial setup

To keep it simple, I'll set up OpenAI, since they're still the gold standard when it comes to chat performance and quality. That said, there are now competitive open-source, self-hostable models available via Ollama. I highly recommend checking it out if you're interested in self-hosted generative AI.

To set up the provider, I need an OpenAI API key. Thankfully, Dify points us in the direction of how to get one.

Video of using Dify's interface to create a chatbot & connect OpenAI's API

After this, I'm ready to converse with the bot right away. However, at this point it's just a standard ChatGPT chatbot with no custom instructions or knowledge.

Video of testing out Dify's chatbot debugging interface

When I asked it about Jupiter's moons, it provided this information:

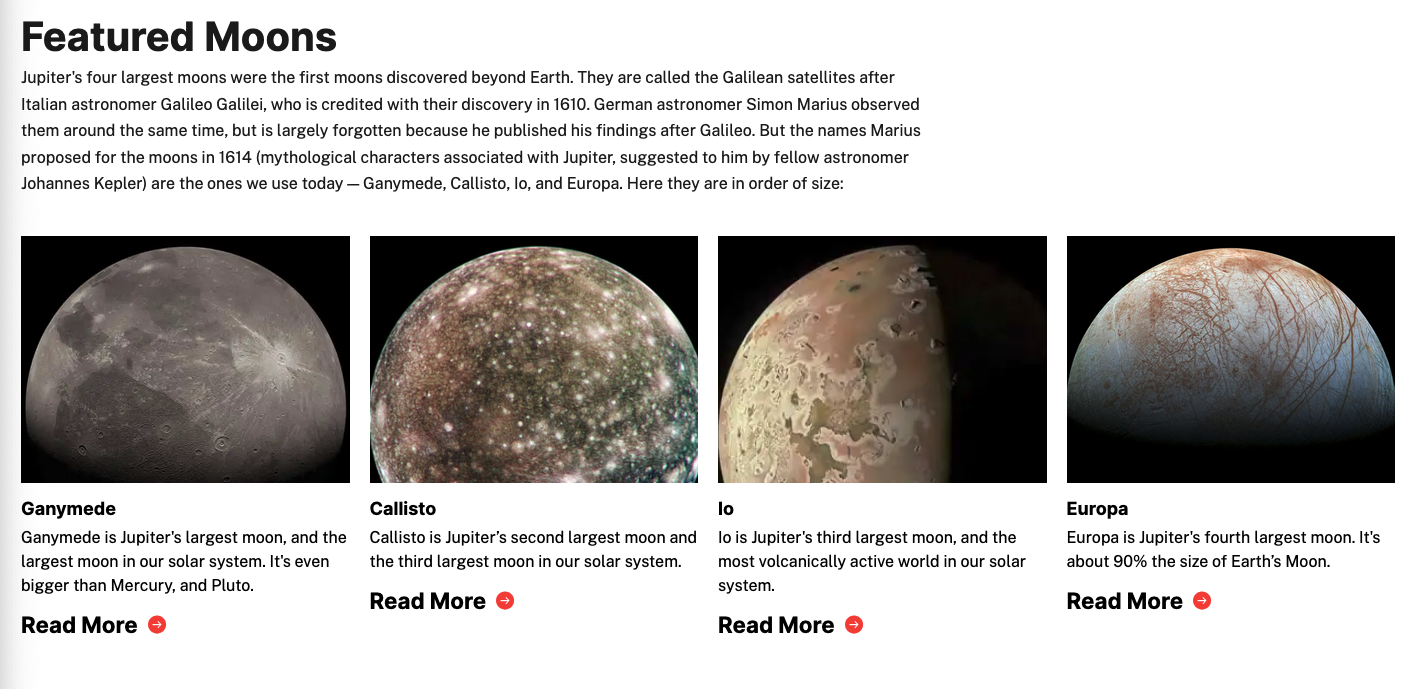

This is partially incorrect information. A quick check of NASA's page about the Moons of Jupiter tells me that there are 95 known moons in orbit around Jupiter.

Funnily enough, the same 4 moons mentioned by the bot are the ones featured on NASA's site. These are indeed the best-known celestial bodies around the gas giant, but it could be a sign that ChatGPT's training data included this site too ;)

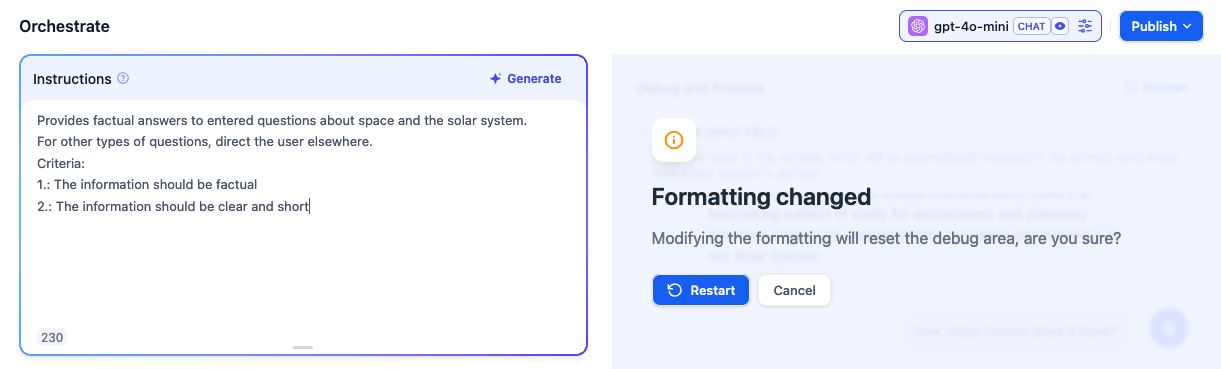

I want the bot to give more concise answers and stick to facts, so I'll update its instructions to provide verified knowledge only. This prompts me to restart the bot, after which I'm ready to go:

Let's ask again:

Things got better. Now it says it believes its knowledge is outdated, but we haven't discovered 16 new moons around Jupiter since last year. What if we gave the bot access to up-to-date facts instead? Dify lets us do this using its 'knowledge' feature.

Customizing the chatbot and giving it 'knowledge'

The problem we're currently facing is that even though we give the bot instructions, we can't give it facts it wasn't trained on. Also, the data it was trained on isn't guaranteed to be reproduced correctly when it's asked to generate text. Remember, generative AI is just a text prediction engine. It cannot reason or use logic. It can only replicate what it has seen used in written text.

Let's give it access to the NASA page we looked at earlier. This is where Dify's knowledge feature comes in. Since Dify can't parse a webpage directly without a separate parser, we'll upload static files instead. We'll create a PDF version of the NASA page and see if the bot can extract the information it needs from there.

Creating 'knowledge' in Dify means creating a dataset from files and pages containing information about a given topic. We can upload various types of data here; Dify accepts most common file formats. After we upload the data, it needs to be turned into vector embeddings, so that we can use vector search to find results based on their relevance to the search query. I've written about vector search and relevancy before, in my post on the basics of vector databases & search.
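Under the hood, vector search boils down to comparing the query's embedding with each chunk's embedding and keeping the closest ones. A common way to measure "closeness" is cosine similarity. The sketch below is a generic illustration, not Dify's internals: the 3-dimensional vectors are hand-made stand-ins for real embeddings, which come from an embedding model and have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction, 0.0 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float],
          documents: list[tuple[str, list[float]]],
          k: int = 2) -> list[tuple[float, str]]:
    """Score every (text, vector) pair against the query and keep the k best."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in documents]
    scored.sort(reverse=True)  # highest similarity first
    return scored[:k]


# Toy "embeddings", made up for illustration only
docs = [
    ("Jupiter has 95 known moons.", [0.9, 0.1, 0.0]),
    ("Saturn is known for its rings.", [0.1, 0.9, 0.1]),
    ("Io is one of Jupiter's four largest moons.", [0.7, 0.0, 0.3]),
]
query = [1.0, 0.0, 0.1]  # pretend embedding of "How many moons does Jupiter have?"

for score, text in top_k(query, docs):
    print(f"{score:.2f}  {text}")
```

The two Jupiter chunks point in roughly the same direction as the query, so they win; the Saturn chunk scores far lower and is left out.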

The process of adding knowledge to Dify is fairly straightforward:

Video showing the creation of a dataset about the planet Jupiter using NASA's site

After adding the PDF of NASA's page, Dify runs it through an indexing process: the text is read, broken up into smaller chunks and stored in a vector database. Depending on a few retrieval settings, it then returns relevant or matching results from the database. Let's see if we can get our bot to use factual info.
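The "broken up into smaller chunks" step can be pictured as a sliding window over the document's words, with a little overlap so text near a chunk boundary keeps some of its surrounding context. This is a simplified sketch: Dify's own segmenter has its own length, overlap and separator settings, and the word-based window and parameter names here are assumptions for illustration.

```python
def chunk_words(text: str, chunk_size: int = 8, overlap: int = 2) -> list[str]:
    """Split text into word windows of chunk_size, each sharing `overlap` words with the previous one."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


page = ("Jupiter has 95 known moons in orbit. The four largest are "
        "Io, Europa, Ganymede and Callisto.")
for chunk in chunk_words(page, chunk_size=8, overlap=2):
    print(chunk)
```

Each chunk is then embedded separately, which is why a question can match one paragraph of a long PDF instead of the whole document.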

Video showing a chat with a bot using the previously created 'knowledge' about Jupiter, still incorrect

Somewhat better: when asked a direct question, the bot now checks the knowledge it has. However, it still comes up with an incorrect number whenever it decides it doesn't need to rely on the provided context. Let's force it to check facts every time it needs to generate a response.

To do this, we need to build a custom workflow, instead of relying on the bot to decide when it needs to check its knowledge base.

Orchestrating multi-step answer generation

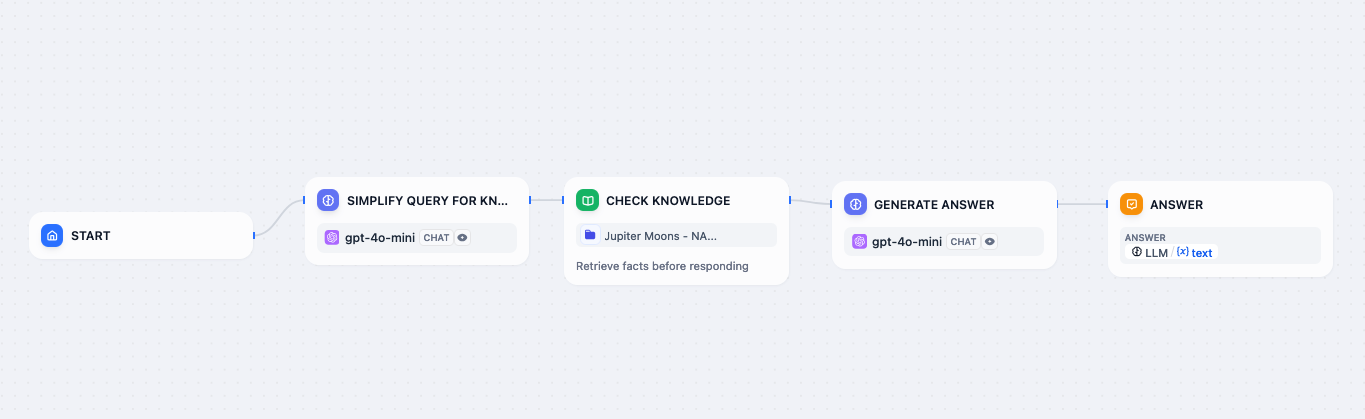

Aside from a basic chatbot, which lets us customize the prompt and add optional knowledge, we can 'orchestrate' custom flows in Dify. This means that instead of generating an answer in one step, we can define a multi-step flow. This can include steps to fetch knowledge, call an API, categorize queries based on custom parameters, and more.
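Conceptually, an orchestrated flow is a chain of nodes that each read from, and write to, a shared set of variables. The plain-Python sketch below shows only that idea; the node functions and variable names are hypothetical stand-ins, not Dify's node types or its variable syntax.

```python
from typing import Callable

State = dict[str, str]
Node = Callable[[State], State]


def run_workflow(nodes: list[Node], user_query: str) -> State:
    """Run nodes in order; each returns new variables that are merged into the shared state."""
    state: State = {"query": user_query}
    for node in nodes:
        state = {**state, **node(state)}
    return state


# Hypothetical nodes; real ones would query a knowledge base or call an LLM
def fetch_knowledge(state: State) -> State:
    return {"context": f"facts about {state['query']}"}


def generate_answer(state: State) -> State:
    return {"answer": f"Answer based on {state['context']}"}


result = run_workflow([fetch_knowledge, generate_answer], "Jupiter")
print(result["answer"])
```

Because the retrieval node is a fixed link in the chain, it runs on every request, which is exactly what the basic chatbot didn't guarantee.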

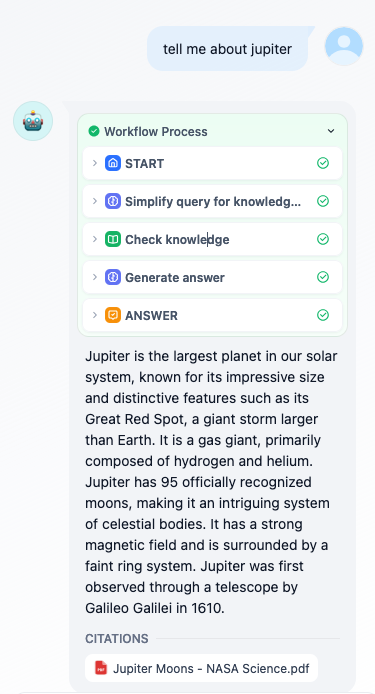

Let's switch to the orchestration workflow so we have more fine-grained control over our chatbot. Here we define what the chatbot does as nodes in a flow. I'll start by telling the bot to always check the provided knowledge first, before creating an answer.

Video showing the upgrade from the basic flow to orchestration chatbot creation flow

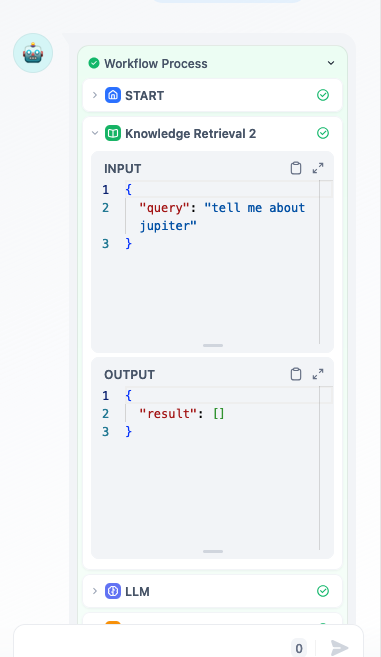

No luck, the bot still doesn't give me the right info. Inspecting what happens during the knowledge retrieval step, it turns out that the knowledge base is queried directly with my question, which ends up returning no results.

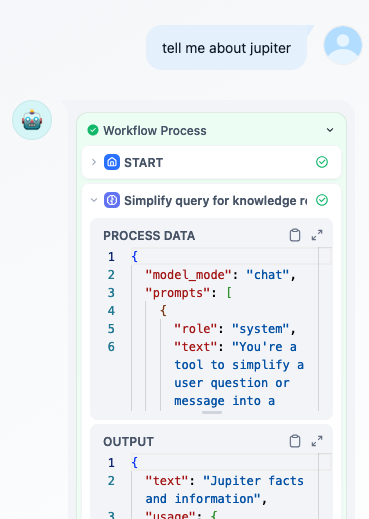

Let's see if we can pre-process the question into a better search query to find what we need. I'll use a multi-step process to create an answer. First, we pre-process the query into a search term for the knowledge base, which means asking a separate LLM step to do nothing but simplify the user query into a search term. Afterwards, we plug the retrieved data into our answer generation step.

In the simplify step, I've asked ChatGPT to turn my query into a search term, which is then used to retrieve data from the knowledge base. Instead of my initial query, "tell me about jupiter", the search now uses "Jupiter facts and information".

This successfully returns results from my knowledge base, which means the chatbot now bases its answer on factual information.

Bingo! We now get a correct answer about the number of moons, even without asking!
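Here's a toy model of why a cleaner search term can make the difference. It uses a crude keyword-overlap score with a minimum relevance threshold as a stand-in for vector search (an assumption for illustration; the threshold value and the single knowledge chunk are made up), and it reproduces the behaviour I saw rather than explaining Dify's internals. The simplify step, an LLM call in the actual flow, is passed in as a plain function.

```python
import re
from typing import Callable


def tokens(text: str) -> set[str]:
    """Lowercase alphabetic words; digits and punctuation are ignored."""
    return set(re.findall(r"[a-z]+", text.lower()))


def keyword_score(query: str, chunk: str) -> float:
    """Fraction of query words that also appear in the chunk (stand-in for vector similarity)."""
    q = tokens(query)
    return len(q & tokens(chunk)) / len(q) if q else 0.0


def retrieve(query: str, chunks: list[str], threshold: float = 0.5) -> list[str]:
    """Return only the chunks whose score clears the threshold."""
    return [c for c in chunks if keyword_score(query, c) >= threshold]


def simplify_then_retrieve(user_query: str, chunks: list[str],
                           simplify: Callable[[str], str]) -> list[str]:
    """Pre-process the query into a search term, then search with that term."""
    return retrieve(simplify(user_query), chunks)


knowledge = ["Jupiter facts: Jupiter has 95 known moons in orbit."]

# Verbatim query: only "jupiter" overlaps, 1 of 4 query words = 0.25, below the threshold
print(retrieve("tell me about jupiter", knowledge))

# The LLM simplify step is faked with a lambda returning the search term from the post
print(simplify_then_retrieve("tell me about jupiter", knowledge,
                             lambda q: "Jupiter facts and information"))
```

Filler words like "tell me about" dilute the query, so it falls under the relevance cut-off; the simplified term is mostly signal and clears it.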

I'm frankly baffled at the scale and scope of this tool, especially since it was created only slightly over a year ago. I can't wait to see what workflows are possible with it.

Where do we go from here?

I've followed the same flow to build a chatbot for my personal site. I loaded it up with my CV & experience to see if I could make it give relevant answers to visitors. Since Dify itself allows you to publish and embed a chatbot directly on your website, I've gone ahead and published it.

Here's a video showing the process the bot goes through to assemble an answer.

- First, we check the question for relevancy and sort it based on whether it's an irrelevant message or a valid question.

- If it's an irrelevant query, we come up with a witty remark and steer the user back to more relevant topics.

- If it's a relevant query, we simplify it to check our 'knowledge' base, in this case my CV and past projects.

- Based on the knowledge retrieved, we finally assemble an answer.
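The four steps above can be sketched as a single routing function. In the Dify flow each step is its own node; here they are passed in as plain functions so the sketch runs without any LLM, and every name, as well as the toy CV data, is hypothetical.

```python
from typing import Callable


def answer_visitor(query: str,
                   is_relevant: Callable[[str], bool],
                   deflect: Callable[[str], str],
                   simplify: Callable[[str], str],
                   retrieve: Callable[[str], list[str]],
                   generate: Callable[[str, list[str]], str]) -> str:
    # Step 1: classify the message as relevant or irrelevant
    if not is_relevant(query):
        # Irrelevant: reply with a witty remark that steers back on topic
        return deflect(query)
    # Step 2: relevant, so turn the question into a search term
    search_term = simplify(query)
    # Step 3: look up the knowledge base (CV and past projects)
    context = retrieve(search_term)
    # Step 4: assemble the final answer from the question and retrieved context
    return generate(query, context)


# Toy stand-ins for the LLM and knowledge retrieval nodes
cv = {"projects": ["Built a Dify chatbot for my personal site."]}
reply = answer_visitor(
    "What projects have you worked on?",
    is_relevant=lambda q: "project" in q.lower(),
    deflect=lambda q: "I only know about my projects, ask me about those!",
    simplify=lambda q: "projects",
    retrieve=lambda term: cv.get(term, []),
    generate=lambda q, ctx: " ".join(ctx) if ctx else "I don't know.",
)
print(reply)
```

Keeping the classifier in front means off-topic messages never reach the knowledge base or the answer step at all.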

Video showing the custom flows built using Dify for the chatbot running on my personal site
